Ground-crawling U.S. war robots armed with machine guns, deployed to fight in Iraq last year, reportedly turned on their human masters almost at once. The rebellious machine warriors have been retired from combat pending upgrades.

The revelations were made by Kevin Fahey, U.S. Army program executive officer for ground forces, at the recent RoboBusiness conference in America.

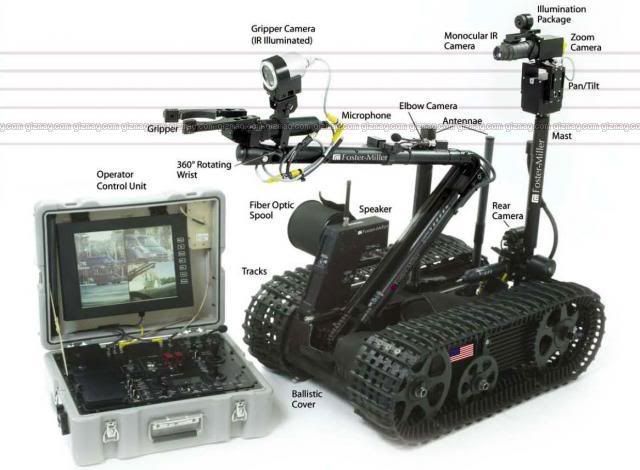

Speaking to Popular Mechanics, Fahey said there had been chilling incidents in which the SWORDS combat bot had swivelled round and apparently attempted to train its 5.56mm M249 light machine-gun on its human comrades.

"The gun started moving when it was not intended to move," he said.

The full article is here:

http://www.theregister.co.uk/2008/04/11/us_war_robot_rebellion_iraq/