Welcome! The TrekBBS is the number one place to chat about Star Trek with like-minded fans.

If you are not already a member then please register an account and join in the discussion!

Looming global arms race to create superintelligent AI

- Thread starter RAMA

I agree with this attitude toward self-organizing complexity in general. Although it should be noted that brains are not fractals; they are not self-similar at all scales. Nor are they completely self-organizing but receive developmental guidance from the genome and from environmental cues.

"Emergence" is a fancy way of saying "I don't know. It just happened." Complex patterns in fractals are data, but not information, like DNA, for example.

Repeat after me. There is no such thing as a superintelligent AI.

Yes, right now of course. It's presupposing accelerated change of course which is a fact.

"Accelerated change" isn't happening in AI and never has been. Sorry.

As a product of info-technology it is subject to accelerated change. There's really no question of this at all.

I posted a graph last year that shows the progress in AI paralleling the biological and technological, to mark the occasion of reaching mouse-level intelligence in AI recently (exactly as predicted beforehand by Kurzweil and others). Human beings have successfully bridged a billion-year evolutionary gap in a few decades of research, despite an "AI Winter" where both $ and advances stagnated. The long-term progress curve is still accelerated. Now money and advances flood the field.

http://www.huffingtonpost.com/wait-...-the-road-to-superintelligence_b_6648480.html

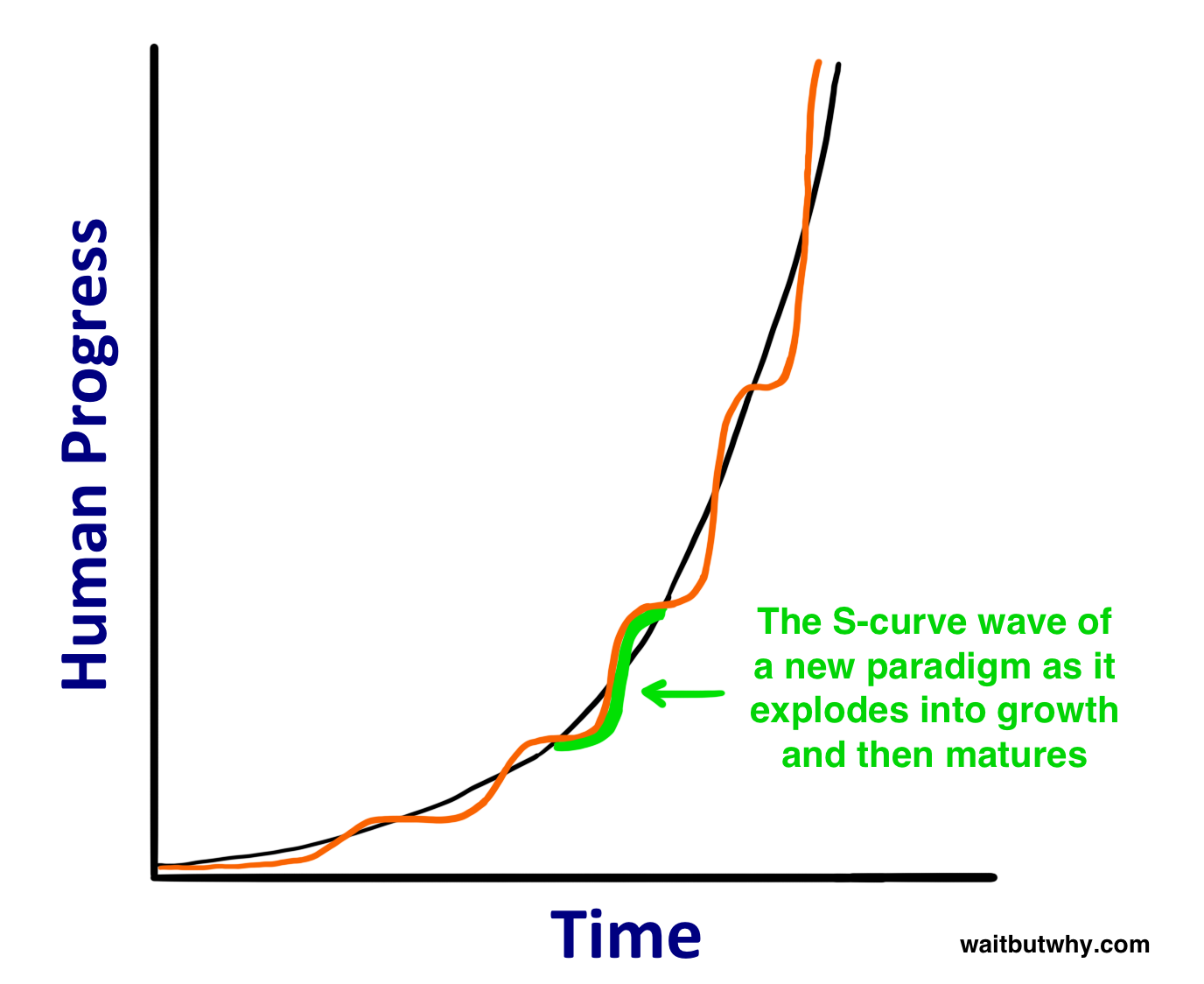

http://singularityhub.com/2015/02/0...-future-is-arriving-far-faster-than-expected/

An "S" is created by the wave of progress when a new paradigm sweeps the world. The curve goes through three phases:

- Slow growth (the early phase of exponential growth)

- Rapid growth (the late, explosive phase of exponential growth)

- A leveling off as the particular paradigm matures

If you look only at very recent history, the part of the S-curve you're on at the moment can obscure your perception of how quickly things are advancing. The chunk of time between 1995 and 2007 saw the explosion of the Internet; the introduction of Microsoft, Google, and Facebook into the public consciousness; the birth of social networking; and the introduction of cellphones and then smartphones. That was Phase 2, the growth-spurt part of the S. But 2008 to 2015 has been less groundbreaking, at least on the technological front. Someone thinking about the future today might examine the last few years to gauge the current rate of advancement, but that's missing the bigger picture. In fact, a new, huge Phase 2 growth spurt might be brewing right now.
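The three phases of the S-curve described above can be sketched with a logistic function. This is only an illustration: the quoted article does not name a formula, so the logistic model and the names `logistic`, `growth_per_step`, `ceiling`, `rate` and `midpoint` are assumptions made for the example.

```python
import math


def logistic(t, ceiling=1.0, rate=1.0, midpoint=0.0):
    """Logistic S-curve: slow start, rapid middle, leveling off at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def growth_per_step(t, step=1.0):
    """How much the curve rises between t and t + step."""
    return logistic(t + step) - logistic(t)


# Sample one point in each phase (the time values are arbitrary choices).
for label, t in [("slow growth", -6.0), ("rapid growth", -0.5), ("leveling off", 5.0)]:
    print(f"{label:>12}: rise over one step = {growth_per_step(t):.4f}")
```

Someone who only samples the "leveling off" stretch sees almost no change per step, even though the same curve rose steeply a few steps earlier, which is the perception problem the quoted passage describes.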

3) Our own experience makes us stubborn old men about the future. We base our ideas about the world on our personal experience, and that experience has ingrained the rate of growth of the recent past in our heads as "the way things happen." We're also limited by our imagination, which takes our experience and uses it to conjure future predictions -- but often, what we know simply doesn't give us the tools to think accurately about the future. When we hear a prediction about the future that contradicts our experience-based notion of how things work, our instinct is that the prediction must be naive. If I tell you, later in this post, that you may live to be 150, or 250, or not die at all, your instinct will be, "That's stupid. If there's one thing I know from history, it's that everybody dies." And yes, no one in the past has not died. But no one flew airplanes before airplanes were invented either.

So while "naaah" might feel right as you read this post, it's probably actually wrong. The fact is that if we're being truly logical and expecting historical patterns to continue, we should conclude that much, much, much more should change in the coming decades than we intuitively expect. Logic also suggests that if the most advanced species on a planet keeps making larger and larger leaps forward at an ever-faster rate, at some point, they'll make a leap so great that it completely alters life as they know it and the perception they have of what it means to be a human -- kind of like how evolution kept making great leaps toward intelligence until finally it made such a large leap to the human being that it completely altered what it means for any creature to live on planet Earth. And if you spend some time reading about what's going on today in science and technology, you start to see a lot of signs quietly hinting that life as we know it cannot withstand the leap that's coming next.

-----------------

Used to be, folks were way too bullish about technology and way too optimistic with their predictions. Flying cars and Mars missions being two classic (they should be here by now) examples. The Jetsons being another.

But today, the exact opposite is happening.

$ in AI:

You mentioned the AI tool kit. Hasn’t AI failed to live up to its expectations?

Kurzweil:

There was a boom and bust cycle in AI during the 1980s, similar to what we saw recently in e-commerce and telecommunications. Such boom-bust cycles are often harbingers of true revolutions; recall the railroad boom and bust in the 19th century. But just as the Internet “bust” was not the end of the Internet, the so-called “AI Winter” was not the end of the story for AI either. There are hundreds of applications of “narrow AI” (machine intelligence that equals or exceeds human intelligence for specific tasks) now permeating our modern infrastructure. Every time you send an email or make a cell phone call, intelligent algorithms route the information. AI programs diagnose electrocardiograms with an accuracy rivaling doctors, evaluate medical images, fly and land airplanes, guide intelligent autonomous weapons, make automated investment decisions for over a trillion dollars of funds, and guide industrial processes. These were all research projects a couple of decades ago. If all the intelligent software in the world were to suddenly stop functioning, modern civilization would grind to a halt. Of course, our AI programs are not intelligent enough to organize such a conspiracy, at least not yet.

http://nextbigfuture.com/2014/03/quantum-computing-and-new-approaches-to.html

And of course, the always important:

Law of accelerating returns

https://lifeboat.com/ex/law.of.accelerating.returns

Extremely classical and highly outdated view:

From THE DOOR INTO SUMMER by Robert Heinlein:

...in my opinion a machine can never have judgment; the side circuit is a hunting circuit, the programming of which says "look for so-and-so within such-and-such limits; when you find it, carry out your basic instruction."

From 2001: A SPACE ODYSSEY by Stanley Kubrick and Arthur C. Clarke:

Interviewer: Do you believe that Hal has genuine emotions?

Astronaut David Bowman: Well, he acts like he has genuine emotions. He's programmed that way to make it easier for us to talk to him. As to whether or not he has feelings is something I don't think anyone can truthfully answer.

Whatever kind of "AI" you are talking about, it sure isn't the stuff that'll lead to mind uploading and the Singularity.

Neither is the new link either.

Seriously, a program that can follow numbers, then execute one command based on it being high enough.

That's nowhere near intelligent nevermind anything remotely sentient.

Well there's two kinds of A.I., weak and strong. This would probably be weak A.I. and we'll almost certainly have that in the next few decades. It's just going to crunch numbers, it's not going to paint a picture. I do think that eventually we'll develop strong A.I. unless we screw up and technology stops advancing. Given enough time and research, whether it's 10 years or 1,000 we're going to do it. I don't think there is anything magical about the ability to think, we just haven't figured it out and future generations will hopefully be smarter than us.

I don't think it's really a question of whether we can create it, but whether we should create it. It would solve a lot of problems, but create new ones that we haven't even thought of. We could get it to stop email spam and it decides that, since humans send spam, it needs to stop us. That doesn't mean it's stupid, it just means that it doesn't think like we do. If mosquitoes spread disease, we try to wipe out the mosquitoes. So I don't know if we could have a philosophical discussion with an A.I., but there are plenty of people we can't do that with either, and they aren't idiots.

Yes, right now of course. It's presupposing accelerated change of course which is a fact.

"Accelerated change" isn't happening in AI and never has been. Sorry.

As a product of info-technology it is subject to accelerated change.

No it isn't. LOTS of things are products of information technology but are not subject to accelerated change, but merely accelerated proliferation. Cat gifs, for example, are not experiencing accelerated change in either size or sophistication; there's just a hell of a lot more OF them.

It is also worth pointing out that accelerated growth in IT is not causing accelerated growth in HUMANS. This is at least one indication that INTELLIGENCE is not actually a product of Information Technology and that an additional factor needs to be introduced in order for this to be the case.

There's really no question of this at all.

Really? Because everybody but YOU is questioning it.

And of course, the always important:

Law of accelerating returns

Not to be confused with the law of diminishing returns, which you prefer to ignore because you don't believe it will come into play.

It says:

Hong Kong start-up to bet millions on hedge fund run by artificial intelligence.

The words that caught your attention: "Artificial intelligence."

The words that you overlooked:

"Hong Kong"

"Startup"

"To"

"Bet"

"Hedge fund"

In other words this is a rich guy who thinks he has an easy way to get a little bit richer.

You don't need an AI to do any of the things he's claiming it needs to do, and CALLING it an AI is really just a play on buzzwords. The article eventually gets around to using the term "quantitative software" which is what he's ACTUALLY referring to. The only special thing about this company is that they're trying to make predictions further in advance, which everyone in the universe is already trying to do.

Even strong AI would tend to function within the parameters and the context of its programming. If you set a strong AI to stop all spam on the internet, the worst it can do is delete the accounts of everyone who spams. Going after the spammers in meatspace is WAY beyond the purview of its task; its starting parameters would have to include that in the pursuit of its goals.

To be blunt, no matter how smart your AI is, if you haven't taught it how to function within a defined framework (this internet, that server cluster, this battlefield, that room) then you have created something relatively useless and irritating. When I tell a strong AI to do the dishes, I don't want to come home and find out it has washed every dish in Greater Chicago just because I didn't specify the fucking thing should only wash MY dishes. No, I'm gonna take that robot BACK to the Apple Store and get a refund, and probably so will a lot of other people, and the lead programmer of the thing is going to get fired for incompetence.

Corollary: A superintelligent AI, given the task of eliminating spam on the internet, would probably come back at you 15 seconds later with a report on the possible economic impact of eliminating spam and point out to you that reducing spam would actually create more harm than good and "Here's a link to an open source anti-spam program that any idiot can install. You're welcome."

Strong AI is at least 50 years, if not hundreds of years away. I have no doubt Strong AI will eventually be created. All the work that's currently been done on weak AI has contributions towards developing strong AI. In fact, writing that "Machine Learning 101" thread had given me a few ideas as to how strong AI might eventually work.

And when we get Strong AI, we are going to discover that its thought process would probably have as many flaws as any sentient human being would have. It's not going to anticipate every possible outcome. It's not going to come up with creative, workable solutions on a consistent basis.

It's not going to solve all our problems, simply because there are problems that have an infinite number of possible outcomes. Such problems would take forever to solve because of combinatorial explosion. Regardless of how smart the AI is, it can never beat the problem of combinatorial explosion. And the sad thing is, there's probably an infinite number of problems with an infinite number of possibilities.

So while I believe Strong AI is a possibility, "superintelligent" AI is really just a fantasy thought up by people who frankly didn't put much thought into the idea.
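To make the combinatorial explosion argument above concrete, here is a minimal sketch of a finite case: brute-forcing every ordering of n items, which means n! candidates. The machine speed `EVALS_PER_SECOND` and the helper `brute_force_years` are hypothetical, chosen only for illustration, and the post's stronger claim about problems with infinitely many outcomes is not modeled here.

```python
import math

EVALS_PER_SECOND = 1e9                 # assumed speed of a hypothetical machine
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(n_items, evals_per_second=EVALS_PER_SECOND):
    """Years needed to check every ordering of n_items (n! candidates)."""
    return math.factorial(n_items) / evals_per_second / SECONDS_PER_YEAR


for n in (10, 20, 30):
    print(f"n={n:2d}: {math.factorial(n):.3e} orderings, "
          f"about {brute_force_years(n):.3e} years")

# A machine a thousand times faster barely moves the wall for n = 30.
print(f"n=30 at 1e12 evals/s: about {brute_force_years(30, 1e12):.3e} years")
```

The point of the sketch is that raw speed only shifts the limit by a few items. It says nothing about heuristics or approximations, which avoid enumerating every candidate in the first place.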

I like to point out that everything in the universe can be thought of as number crunching. Physics defines everything in terms of Energy. Computer Science defines everything in terms of Information. Yet the formula used in Physics for changes in energy, Entropy, is nearly identical to Information Theory's formula. In other words, Energy IS Information.

It's therefore probably not surprising that there are quite a few AI algorithms that use ideas from Physics. And why we often use a famous physicist's name in our algorithms... like Gibbs Sampling, Restricted Boltzmann Machines, Tikhonov Regularization.
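The post does not say which formulas it has in mind. A reasonable guess is the Gibbs entropy from statistical mechanics, S = -k_B * sum(p * ln p), and the Shannon entropy from information theory, H = -sum(p * log2 p). The sketch below assumes that reading; the function names and the example distribution are illustrative only.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Gibbs entropy S = -k_B * sum(p * ln p), in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.25]  # an arbitrary example distribution
h = shannon_entropy_bits(probs)
s = gibbs_entropy(probs)

print(f"Shannon entropy: {h} bits")
print(f"Gibbs entropy:   {s:.6e} J/K")
print(f"k_B * ln(2) * H: {K_B * math.log(2) * h:.6e} J/K")
```

Under this reading the two quantities differ only by the constant factor k_B * ln 2, which is the formal similarity the post points to. The sketch shows only that the expressions share a form.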

And when we get Strong AI, we are going to discover that its thought process would probably have as many flaws as any sentient human being would have. It's not going to anticipate every possible outcome. It's not going to come up with creative, workable solutions on a consistent basis.

It will probably be enough that it might think differently than humans—assuming humans are open-minded enough to listen to some of the crazy ideas spilling out and say, "Y'know, that just might work. Let's try it."

I like to point out that everything in the universe can be thought of as number crunching.

...

It's therefore probably not surprising that there are quite a few AI algorithms that use ideas from Physics.

It's equally surprising that the Big Bang uses ideas from religion (creation ex nihilo, the Hindu cyclic universe, etc.). Gee, those mystics were right all along! /s

Whatever kind of "AI" you are talking about, it sure isn't the stuff that'll lead to mind uploading and the Singularity.

Neither is the new link either.

Seriously, a program that can follow numbers, then execute one command based on it being high enough.

That's nowhere near intelligent nevermind anything remotely sentient.

Lots of real experts disagree with you. As my quotes and links demonstrate. I think I'll judge them based on that.

But even a self-emergent AI is not the only path to strong AI; it's just one that a lot of proponents are saying could surprise us, as opposed to a dedicated effort to create strong AI.

Strong AI is at least 50 years, if not hundreds of years away. I have no doubt Strong AI will eventually be created. All the work that's currently been done on weak AI has contributions towards developing strong AI. In fact, writing that "Machine Learning 101" thread had given me a few ideas as to how strong AI might eventually work.

Yes, this is the conventional view, even a good number of those in the field see it that way still, but as we know that's not how it works; accelerated change will make it happen much faster than a couple of hundred years.

You heard it here first: RAMA knows better than actual AI researchers.

Dunning-Kruger in full force here.

Fanatical devotion to an insane ideal will do that.

Lots of "real experts" disagree with the statement that "a program that can follow numbers then execute one command based on [the number] being high enough" is "nowhere near intelligent nevermind anything remotely sentient?"

That is a VERY specific claim on your part; would you care to double down?

Really? The previous paragraph claimed that the experts disagreed with this very statement. Now you're saying this is the "conventional view?"

It is as if you are using the word "experts" to refer to people who don't know what the hell they're talking about (Kurzweil et al) and "conventional wisdom" to refer to people who do.

even a good number of those in the field see it that way still

"Nearly all of them" is a good number, yes.

but as we know that's not how it works

You have a mouse in your pocket? Who is "we"?

If you want to know what "Intelligence" is, perhaps this is a good starting point.

[yt]https://www.youtube.com/watch?v=AZX6awZq5Z0[/yt]

Whatever kind of "AI" you are talking about, it sure isn't the stuff that'll lead to mind uploading and the Singularity.

Neither is the new link either.

Seriously, a program that can follow numbers, then execute one command based on it being high enough.

That's nowhere near intelligent nevermind anything remotely sentient.

Hm, sounds less complicated than some game-AIs/NPCs...
